[liveblog] The future of libraries

October 7th, 2015

I’m at a Hubweek event called “Libraries: The Next Generation.” It’s a panel hosted by the Berkman Center with Dan Cohen, the executive director of the DPLA; Andromeda Yelton, a developer who has done work with libraries; and Jeffrey Schnapp of metaLab.

NOTE: Live-blogging. Getting things wrong. Missing points. Omitting key information. Introducing artificial choppiness. Over-emphasizing small matters. Paraphrasing badly. Not running a spellpchecker. Mangling other people’s ideas and words. You are warned, people.

Sue Kriegsman of the Center introduces the session by explaining Berkman’s interest in libraries. “We have libraries lurking in every corner…which is fabulous.” Also, Berkman incubated the DPLA. And it has other projects underway.

Dan Cohen speaks first. He says if he were to give a State of the Union Address about libraries, he’d say: “They are as beloved as ever and stand at the center of communities” here and around the world. He cites a recent Pew survey about perspectives on libraries: libraries have the highest approval rating of all American institutions. But, he warns, that’s fragile. There are many pressures, and libraries are chronically under-funded, which is hard to understand given how beloved they are.

First among the pressures on libraries: the move from print. E-book adoption hasn’t stalled, although the purchase of e-books from the Big Five publishers compared to print has slowed. But Overdrive is lending lots of ebooks. Amazon has 65% of the ebook market, “a scary number,” Dan says. In the Pew survey a couple of weeks ago, 35% said that libraries ought to spend more on ebooks even at the expense of physical books. But 20% thought the opposite. That makes it hard to be the director of a public library.

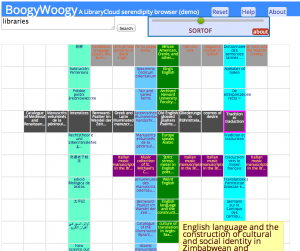

If you look at the ebook market, there’s more reading going on at places like the DPLA. (He mentions the StackLife browser they use, which came out of the Harvard Library Innovation Lab that I used to co-direct.) Many of the ebooks are being provided straight to a platform (mainly Amazon) by the authors.

There are lots of jobs public libraries do that are unrelated to books. E.g., the Boston Public Library is heavily used by the homeless population.

The way forward? Dan stresses working together, collaboration. “DPLA is as much a social, collaborative project as it is a technical project.” It is run by a community that has gotten together to run a common platform.

And digital is important. We don’t want to leave it to Jeff Bezos who “wants to drop anything on you that you want, by drone, in an hour.”

Andromeda: She says she’s going to talk about “libraries beyond Thunderdome,” echoing a phrase from Sue Kriegsman’s opening comments. “My real concern is with the skills of the people surrounding our crashed Boeing.” Libraries need better skills to evaluate and build the software they need. She gives some examples of places where we see tensions between library values and code.

1. The tension between access and privacy. Physical books leave no traces. With ebooks the reading is generally tracked. Overdrive did a deal so that library patrons who access ebooks get notices from Amazon when their loan period is almost up. Adobe does rights management, with reports coming page by page about what people are reading. “Unencrypted over the Internet,” she adds. “You need a fair bit of technical knowledge to see that this is happening,” she says. “It doesn’t have to be this way.” “It’s the DRM and the technology that have these privacy issues built in.”

She points to the NYPL Library Simplified program that makes it far easier for non-techie users. It includes access to Project Gutenberg. Libraries have an incentive to build open architectures that support privacy. But they need the funding and the technical resources.

She cites the Library Freedom Project that teaches librarians about anti-surveillance technologies. They let library users browse the Internet through TOR, preventing (or at least greatly inhibiting) tracking. They set up the first library TOR node in New Hampshire. Homeland Security quickly suggested that they stop. But there was picketing against this, and the library turned it back on. “That makes me happy.”

2. Metadata. She has us do an image search for “beautiful woman” at Google. They’re basically all white. Metadata is sometimes political. She goes through the 200s of the Dewey Decimal system: 90% Christian. “This isn’t representative of human knowledge. It’s representative of what Melvil Dewey thought maps to human knowledge.” Libraries make certain viewpoints more computationally accessible than others. Our ability to write new apps is only as good as the metadata under them. “As we go on to a more computational library world, which is awesome, we’re going to fossilize all these old prejudices. That’s my fear.”

“My hope is that we’ll have the support, conviction and empathy to write software, and to demand software, that makes our libraries better, and more fair.”

Jeffrey: He says his peculiar interest is in how we use space to build libraries as architectures of knowledge. “Libraries are one of our most ancient institutions.” “Libraries have constantly undergone change,” from mausoleums, to cloisters, to warehouses, places of curatorial practice, and civic spaces. “The legacy of that history…has traces of all of those historical identities.” We’ve always faced the question “What is a library?” What are its services? How does it serve its customers? Architects and designers have responded to this, assuming a set of social needs, opportunities, fantasies, and the practices by which knowledge is created, refined, shared. “These are all abiding questions.”

Contemporary architects and designers are often excited by library projects because they crystallize one of the most central questions of the day: “How do you weave together information and space?” We’re often not very good at that. The default for libraries has been: build a black box.

We have tended to associate libraries with collections. “If you ask what is a library?, the first answer you get is: a collection.” But libraries have also always been about the making of connections, i.e., how the collections are brought alive. E.g., the Alexandrian Library was a performance space. “What does this connection space look like today?” In his book with Matthew Battles, they argue that while we’ve thought of libraries as being a single institution, in fact today there are many different types of libraries. E.g., the research library as an information space seems to be collapsing; the researchers don’t need reading rooms, etc. But civic libraries are expanding their physical practices.

We need to be talking about many different types of libraries, each with their own services and needs. The Library as an institution is on the wane. We need to proliferate and multiply the libraries to serve their communities and to take advantage of the new tools and services. “We need spaces for learning,” but the stack is just one model.

Discussion

Dan: Mike O’Malley says that our image of reading is in a salon with a glass of port, but in grad school we’re taught to read a book the way a sous chef guts a fish. A study says that of academic ebooks, 75% of scholars read less than 50 pages of them. [I may have gotten that slightly wrong. Sorry.] Assuming a proliferation of forms, what can we do to address them?

Jeffrey: The presuppositions about how we package knowledge are all up for grabs now. There’s a vast proliferation of channels. “And that’s a design opportunity.” How can we create audiences that would never have been part of the traditional distribution models? “I’m really excited about getting scholars and creative practitioners involved in short-form knowledge and the spectrum of ways you can intersect” the different ways we use these different forms. “That includes print.” There’s “an extraordinary explosion of innovation around print.”

Andromeda: “Reading is a shorthand. Library is really about transforming people and one another by providing access to information.” Reading is not the only way of doing this. E.g., in maker spaces people learn by using their hands. “How can you support reading as a mode of knowledge construction?” Ten years ago she toured Olin College library, which was just starting. The library had chairs and whiteboards on castors. “This is how engineers think”: they want to be able to configure a space on the fly, and have toys for fidgeting. E.g., her eight-year-old has to be standing and moving if she’s asked a hard question. “We need to think of reading as something broader than dealing with a text in front of you.”

Jeffrey: The DPLA has a location in its name: America. The French National Library wants to collect “the French Internet.” But what does that mean? The Net seems to be beyond locality. What role does place play?

Dan: From the beginning we’ve partnered with Europeana. We reused Europeana’s metadata standard, enabling us to share items. E.g., Europeana’s 100th anniversary of the Great War web site was able to seamlessly pull in content from the DPLA via our API, and from other countries. “The DPLA has materials in over 400 languages,” and actively partners with other international libraries.

Dan points to Amy Ryan (the DPLA chairperson, who is in the audience) and points to the construction of glass walls to see into the Boston Public Library. This increases “permeability.” When she was head of the BPL, she lowered the stacks on the second floor so now you can see across the entire floor. Permeability “is a very smart architecture” for both physical and digital spaces.

Jeff: Rendering visible a lot of the invisible stuff that libraries do is “super-rich,” assuming the privacy concerns are addressed.

Andromeda: Is there scope in the DPLA metadata for users to address the inevitable imbalances in the metadata?

Dan: We collect data from 1,600 different sources. We normalize the data, which is essential if you want to enable it for collaboration. Our Metadata Application Profile v. 4 adds a field for annotation. Because we’re only a dozen people, we haven’t created a crowd-sourcing tool, but all our data is CC0 (public domain) so anyone who wants to can create a tool for metadata enhancement. If people do enhance it, though, we’ll have to figure out if we import that data into the DPLA.

Jeffrey: The politics of metadata and taxonomy has a long history. The Enlightenment fantasy is for a universal metadata school. What does the future look like on this issue?

Andromeda: You can have extremely crowdsourced metadata, but then you’re subject to astroturfing and popularity boosting results for bad reasons. There isn’t a great solution except insofar as you provide frameworks for data that enable many points of view and actively solicit people to express themselves. But I don’t have a solution.

Dan: E.g., at DPLA there are lots of ways of entering dates. We don’t want to force a scheme down anyone’s throat. But the tension between crowdsourced and more professional curation is real. The Indianapolis Museum of Art allowed freeform tagging and compared the crowdsourced tags vs. professional. Crowdsourced: “sea” and “orange” were big, which curators generally don’t use.

Q&A

Q: People structure knowledge differently. My son has ADHD. Or Nepal, where I visited recently.

A: Dan: It’s great that the digital can be reformatted for devices but also for other cultural views. “That’s one of the miraculous things about the digital.” E.g., digital book shelves like StackLife can reorder themselves depending on the query.

Jeff: Yes, these differences can be profound. “Designing for that is a challenge but really exciting.”

Andromeda: This is why it’s so important to talk with lots of people and to enable them to collaborate.

me: Linked data seems to resolve some of these problems with metadata.

Dan: Linked Data provides a common reference for entities. Allows harmonizing data. The DPLA has a slot for such IDs (which are URIs). We’re getting there, but it’s not our immediate priority. [Blogger’s prerogative: Having many references for an item linked via “sameAs” relationships can help get past the prejudice that can manifest itself when there’s a single canonical reference link. But mainly I mean that because Linked Data doesn’t have a single record for each item, new relationships can be added relatively easily.]
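To make the bracketed aside above a bit more concrete, here is a minimal Python sketch of the idea, not anything shown at the panel. It treats “sameAs” links as plain pairs of URIs and groups the URIs that end up connected, so no single identifier has to be the canonical one. The `example.org` URIs and the `clusters` helper are hypothetical, invented for illustration; this is not the DPLA’s API or data model.

```python
from collections import defaultdict

# Hypothetical identifiers for illustration only; none come from the DPLA.
SAME_AS = [
    ("http://example.org/dpla/item/1", "http://example.org/europeana/item/A"),
    ("http://example.org/europeana/item/A", "http://example.org/local/item/x"),
    ("http://example.org/dpla/item/2", "http://example.org/local/item/y"),
]


def clusters(links):
    """Group URIs connected by sameAs links (treated as symmetric and transitive)."""
    parent = {}

    def find(uri):
        # Follow parent pointers to the current representative of uri's group.
        parent.setdefault(uri, uri)
        while parent[uri] != uri:
            parent[uri] = parent[parent[uri]]  # shorten the chain as we go
            uri = parent[uri]
        return uri

    for a, b in links:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_a] = root_b  # merge the two groups

    groups = defaultdict(set)
    for uri in list(parent):
        groups[find(uri)].add(uri)
    return list(groups.values())


if __name__ == "__main__":
    for group in clusters(SAME_AS):
        print(sorted(group))
```

Under these assumptions, adding a new relationship is just appending one more pair to the list; no existing record has to be rewritten, and any URI in a group can serve as the way in.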

Q: How do business and industry influence libraries? E.g., Google has images for every place in the world. They have scanned books. “I can see a triangulation happening.” Virtual libraries? Virtual spaces?

Andromeda: (1) Virtual tech is written outside of libraries, almost entirely. So it depends on what libraries are able to demand and influence. (2) Commercial tech sets expectations for what user experiences should be like, which libraries may not be able to support. (3) People say “Why do we need libraries? It’s all online and I can pay for it.” No, it’s not, and no, not everyone can. Libraries should up their tech game, but there’s an existential threat.

Jeffrey: People use other spaces to connect to knowledge, e.g. coffee houses, which are now being incorporated into libraries. Some people are anxious about that loss of boundary. Being able to eat, drink, and talk is a strong “vision statement” but for some it breaks down the world of contemplative knowledge they want from a library.

Q: The National Science and Technology Library in China last week said they have the right to preserve all electronic resources. How can we do that?

Dan: Libraries have long been sites for preservation. In the 21st century we’re so focused on getting access now now now, we lose sight that we may be buying into commercial systems that may not be able to preserve this. This is the main problem with DRM. Libraries are in the forever business, but we don’t know where Amazon will be. We don’t know if we’ll be able to read today’s books on tomorrow’s devices. E.g., I had a subscription to the Oyster ebook service, but they just went out of business. There go all my books. Open Access advocacy is going to play a critical role. Sure, Google is a $300B business and they’ll stick around, but they drop services. They don’t have a commitment like libraries and nonprofits and universities do to being in the forever business.

Jeff: It’s a huge question. It’s really important to remember that the oldest digital documents we have are 50 years old, which isn’t even a drop in the bucket. There’s far from universal agreement about the preservation formats. Old web sites, old projects, and chunks of knowledge of mine have disappeared. What does it mean to preserve a virtual world? We need open standards, and practices [missed the word]. “Digital stuff is inherently fragile.”

Andromeda: There are some good things going on in this space. The Rapid Response Social Media project is archiving (e.g., #Ferguson). Preserving software is hard: you need the software system, the hardware, etc.

Q: Disintermediation has stripped out too much value. What are your thoughts on the future of curation?

Jeffrey: There’s a high level of anxiety in the librarian community about their future roles. But I think their role comes away reinforced. It requires new skills, though.

Andromeda: In one pottery class the assignment was to make one pot. In another, it was to make 50 pots. The best pots came out of the latter. When lots of people can author lots of stuff, it’s great. That makes curation all the more critical.

Dan: The DPLA has a Curation Corps: librarians helping us organize our ebook collection for kids, which we’re about to launch with President Obama. Also: Given the growth in authorship, yes, a lot of it is Sexy Vampires, but even with that aside, we’ll need librarians to sort through that.

Q: How will Digital Rights Management and copyright issues affect ebooks and libraries? How do you negotiate that or reform that?

Dan: It’s hard to accession a lot of things now. For many ebooks there’s no way to extract them from their DRM and they won’t move into the public domain for well over 100 years. To preserve things like that you have to break the law — some scholars have asked the Library of Congress for exemptions to the DMCA to archive films before they decay.

Q: Lightning round: How do you get people and the culture engaged with public libraries?

Andromeda: Ask yourself: Who’s not here?

Jeffrey: Politicians.

Dan: Evangelism.